For service providers, a journalism degree and Party membership make ideal content moderators

Content moderating is becoming one of the fastest-growing jobs in China's online news and video sector after Chinese regulators cracked down on apps

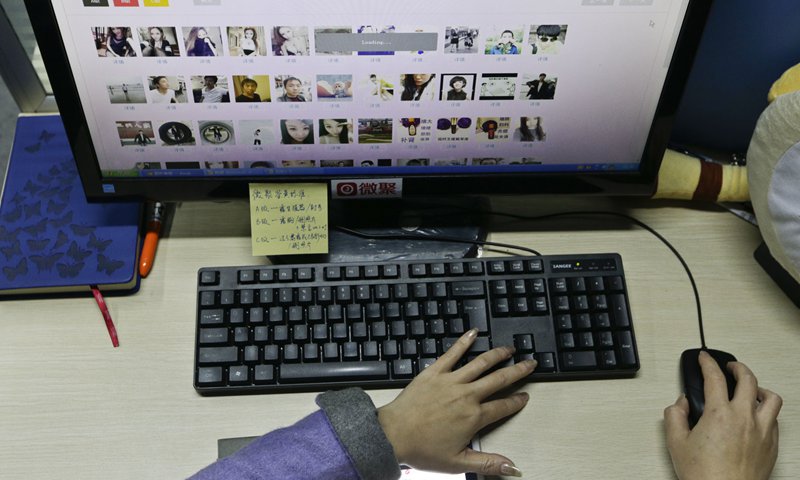

A female moderator screens and filters online photos uploaded by netizens for an internet company. (Photo: VCG)

As the most important line of defense, content moderators say their jobs are boring yet also stressful

To cope with skyrocketing demand, the number of contractors providing content moderation services for online platforms has exploded

Only six months into her job as a content moderator for Yidian Zixun, a Beijing-based news and information aggregator, Li Ou (pseudonym) already felt she had to quit.

Li, 24, said she viewed over 1,000 short videos a day to decide whether these videos, most of which were user-generated, would be allowed online. Although the video platform is in Beijing, she was hired by a contractor 1,200 kilometers away in Xi'an, Northwest China's Shaanxi Province, which provides content-review outsourcing for tech firms in first-tier cities.

The round-the-clock job, which requires reviewers to work rotating night shifts, is monotonous and tiring, Li said, but even the smallest mistake can lead to dire consequences.

"If we accidentally approved videos that had copyright issues or were required to be removed by the government information office, we could be fined and even fired. The contractor may also lose its contract. I often had nightmares about such mistakes," said Li, who earned 4,000 yuan ($637) per month.

Unable to cope with such pressure, Li resigned last June and currently works as a teacher at an educational company.

Content moderating is becoming one of the fastest-growing jobs in China's online video and news sector. Chinese live-video streaming start-up Kuaishou recently announced that it will recruit 3,000 more content moderators on top of its current 2,000.

Jinri Toutiao, China's most popular news app, also said it will expand its content-review team to 10,000 from 6,000. At some video start-ups, the content-review team has become the biggest department in the company, sources say.

The latest hiring spree occurred after China started to crack down on video apps. In April, Kuaishou and Huoshan, a video platform under Jinri Toutiao, were suspended from app stores by China's internet regulator for "vulgar and harmful" content. Both platforms made public apologies and promised to reform their screening methods.

Wei Peng, a research fellow at the Cyberspace Administration of China, told CCTV News, "From what we know, these platforms are not fully performing their duties. The main reason is the lack of content reviewers, which is disproportional to the number of users. Take Kuaishou for example. Over 10.2 million videos are uploaded to the platform each day, but they are reviewed by only 2,000 content moderators."

Low barrier, tough job

Li, who had just graduated from university with a journalism degree, said her six-month experience as a content moderator started with a week-long training period, during which she was taught Yidian Zixun's copyright standards and watched documentaries about sensitive historical events in China, including the 1989 political turmoil.

"During our training we watched a lot of videos that were detrimental to China and those that are reactionary. We need to remember these images so we can spot them when we're reviewing the videos. We're not allowed to show others these documentaries," she said.

After passing an exam, she was officially hired. The first thing Li was asked to do every morning was to read the daily directive from the cyberspace authorities in Beijing. "As a news and information aggregator, Yidian Zixun sees little pornographic content. Our top concern is being politically correct," she said.

Fears of mistakenly approving videos that contain forbidden footage are constant, Li said. Her biggest mistake in her short-lived career was when she approved a music video for a Michael Jackson song that was banned in China. For the mistake, she was censured by her supervisor and fined.

Wen Jia (pseudonym), a content-review team leader at a mainstream live-streaming platform, said that because the industry is labor-intensive, most companies base their content moderation departments in second-tier Chinese cities such as Tianjin, Wuhan and Chengdu, where labor costs are lower.

"Fresh graduates majoring in humanities such as journalism or law are preferred, as they are more familiar with historical topics," Wen told the Global Times.

"Many young people apply to work as content moderators because they are fans and loyal users of these video sites," Wen said.

Managing a team of 500, Wen said she must regularly meet with cyberspace authorities and attend training sessions held by the State Administration of Press, Publication, Radio, Film and Television (SARFT) three times a year.

Booming business

While Chinese internet companies are racing to expand their own content-review teams, these efforts are still far from enough to cope with skyrocketing demand, as authorities impose stricter policies to purify the internet.

As a result, the number of contractors providing content moderation services for online platforms has exploded in recent years. Yang Le, CEO of Yunjingwang, which provides content moderation services, told the Global Times that his business took off last year after the country raised its demands for a clean internet.

Yunjingwang, founded in 2016, currently has 1,500 moderators. During peak periods, such as the two sessions or the release of new government policies, they review "trillions" of posts, voice messages, photos and videos every day with the help of artificial-intelligence (AI) tools that the company developed itself, according to Yang.

According to Yang, these AI tools are quite advanced and can analyze whether "sensitive" words, such as top leaders' names, carry a negative meaning in the wider context of a post. "We only delete them if they're analyzed to be inappropriate in the dialogue context," he said.

He stressed that human content moderators are irreplaceable and can compensate for the deficiencies of AI tools.

To hire qualified moderators, Yang's company prefers to recruit Party members and members of the Communist Youth League as well as men hailing from rural areas, who Yang said are more stable.

Whether a candidate is a Party member is also a factor considered during the hiring process for Wen's company. "Being a Party member means the candidate is positive, and as Party members need to write a lot of ideological reports, they can better understand policy changes," Wen said.

While many employees are from the post-1990s generation, Yang said that they "aren't insensitive to politics," and "the trainings offer them a bigger picture which enables them to better understand what the country does to benefit them."

"They used to only get unilateral information online. What we do is to teach them how to look at a single policy from a historical and down-to-earth perspective," he said, giving the example that the company teaches employees from villages how a particular policy could change their own hometowns.

New market niche

Yang noted that his clients now include not only domestic news, internet and live-streaming companies, but also foreign companies, such as Airbnb, operating in the Chinese market. "These foreign companies have a large demand for content moderation as they don't quite know about our rules," he said.

Like Yunjingwang, some contractors have also found a new market niche: short videos and live-streaming videos in the language or dialect of ethnic minorities. Beijing Shining-Esin Technology, for example, currently has around 100 content moderators in Chengdu, Sichuan Province, over half of whom are ethnic Tibetans.

"Compared with Putonghua, our reviewing of Tibetan content is more targeted at political and terrorism related contents," Cristina Wang, its COO, told the Global Times.

Formerly in the software testing business, Wang noticed in 2016 that many clients had started to ask if she could provide content moderation services for live-streaming videos. "Seeing this demand, we gradually shifted our business model," she said, adding that her clients are mainstream live-streaming platforms that worry user-generated Tibetan-language content, if not monitored, will cause them trouble.

Yang said his company also has moderators who understand Tibetan dialects and the Uyghur language. But the company does not let moderators from a specific ethnic minority group review related content.

"For instance, we usually don't use Uyghur people to moderate their own language content, but rather we find people from other ethnic groups who can also understand the Uyghur language to monitor such contents to avoid trouble," he said.

Yang revealed that they report directly to police if they find terrorism-related information.

Government-level communications

Wang Sixin, a media law professor at the Communication University of China, said that 80 to 90 percent of all online information is now user generated, which requires more content moderators.

He said that the government should set standards and penalties for companies that fail to do content moderation work. Wang Sixin also noted that "it's important for companies to let the government know what they've done. Otherwise, it will cause misunderstandings between the government and companies."

"Without proper or enough communication, it could draw hostilities from the outside and supervisions from the government," he told the Global Times, noting that tech giants like Toutiao should shoulder "more social responsibilities."

These online platforms now have an offline mobilization capacity, which means that platform operators should assess in advance whether offline gatherings could cause social instability. "They should be wary of it and should timely report users' offline events to the authorities if they detect something inappropriate," he added.

Yang explained that contractors like his need more guidance from the government to help them better understand policies. Currently, they can only rely on media professors and experienced moderators to analyze new rules and policies published on government websites.

SARFT held training classes in 2016 and 2017 for moderators who review internet audio-visual art programs to help them comprehend the policies. But Yang said that he hopes in the future there will be more and larger-scale training seminars for companies.

He also called on the government to listen more to the particular problems contractors face in implementing the policies.

"If authorities have ordered to block a person on a specific live-streaming platform, but photos and video clips of him are still being circulated on other social media platforms, we don't know whether we should still delete them," he said.