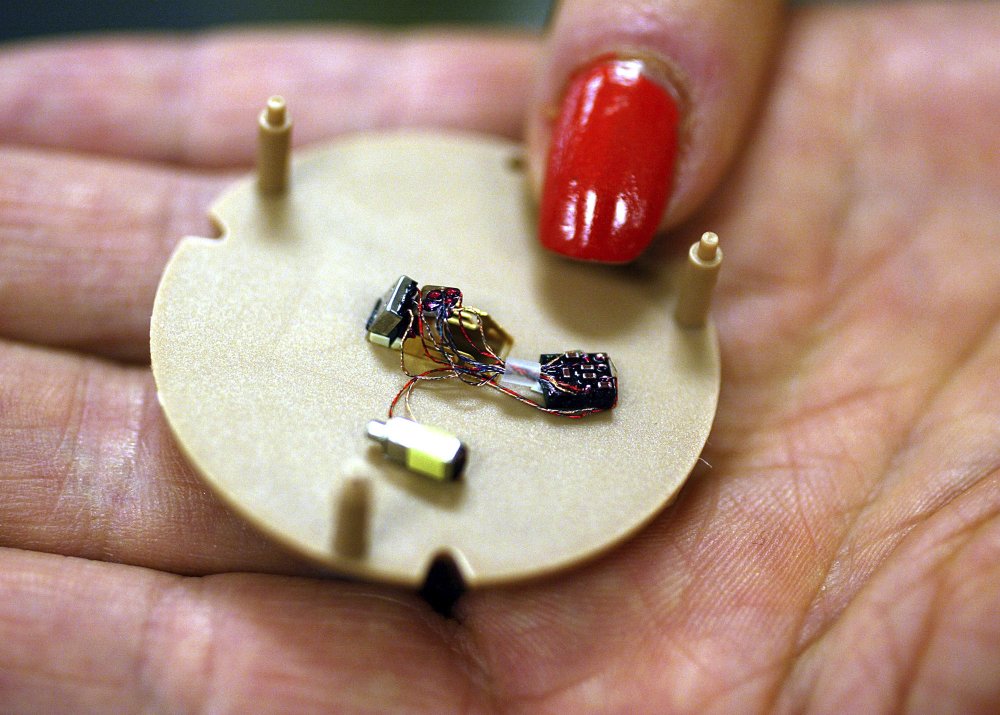

In this July 12, 2004, file photo, a woman holds a hearing aid that uses artificial intelligence in Somerset, N.J. (AP Photo)

The challenges of making the technology industry a more welcoming place for women are numerous, especially in the booming field of artificial intelligence.

To get a sense of just how monumental a task the tech community faces, look no further than the marquee gathering for AI’s top scientists. Preparations for this year’s event drew controversy, and not only because there weren’t enough female speakers or study authors.

The biggest debate was over the conference’s name.

The annual Conference and Workshop on Neural Information Processing Systems, formerly known as NIPS, had become both a punchline and a symbol of just how bad the gender imbalance in artificial intelligence is. Thousands of AI researchers convened in Montreal last week under a slightly tweaked banner, NeurIPS, but with many of the same problems still lurking beneath the surface.

AI’s challenge reflects a broader lack of diversity in the tech industry. At major tech companies, women account for 20 percent or fewer of the engineering and computing roles. By some accounts, AI’s gender imbalance is even worse: One estimate by startup incubator Element AI shows women making up just 13 percent of the AI workforce in the US.

The challenge has repercussions far beyond career recruitment. As artificial intelligence and machine learning, a discipline in which computer systems train themselves on data, make their way into consumer products and everyday life, they can mimic the biases of their human creators.

“The more diversity we have in machine learning, the better job we will do in creating products that don’t discriminate,” said Hanna Wallach, a Microsoft researcher who is a senior program chairwoman of the conference and co-founder of an associated event for women in machine learning.

AI systems look for patterns in huge troves of data, such as what we say to our voice assistants or what images we post on social media. In doing so, these systems can absorb the same gender or racial prejudices found in that data.

Such misfires have increasingly attracted attention. A rogue Microsoft chatbot spouted sexist and racist remarks. A Google app that matches selfies to famous works of art, mostly Western, lumped many non-whites into the same exoticized figures. In another example, a study of several prominent AI systems for recognizing faces showed that they performed far better on lighter-skinned men than on darker-skinned women.